Introduction

When people hear "live chat," the immediate assumption is WebSockets. After all, chat feels real-time. Yet YouTube Live Chat—operating at a scale of millions of concurrent viewers—does not rely on WebSockets for most users. Instead, it uses HTTP-based polling and streaming techniques. This article explains why YouTube made this choice, how it works technically, and how much money and operational complexity this decision saves. The goal is not speculation, but a synthesis of public engineering talks, Medium-scale architecture discussions, CDN behavior, and large-scale system design principles.

The Core Problem: Live Chat at Internet Scale

YouTube's problem is not "how to send messages quickly." It is how to deliver chat:

- To millions of concurrent viewers
- Across more than a hundred countries
- With minimal latency, high reliability, and low cost
- While running alongside the most expensive workload on the internet: video streaming

At this scale, the usual assumptions about WebSockets break down.

Common Assumption: WebSockets = Real-Time Chat

WebSockets provide:

- Persistent bidirectional connections
- Server push
- Low latency

They are perfect for:

- Discord
- Slack
- Twitch chat
- Multiplayer games

But they come with hidden costs.

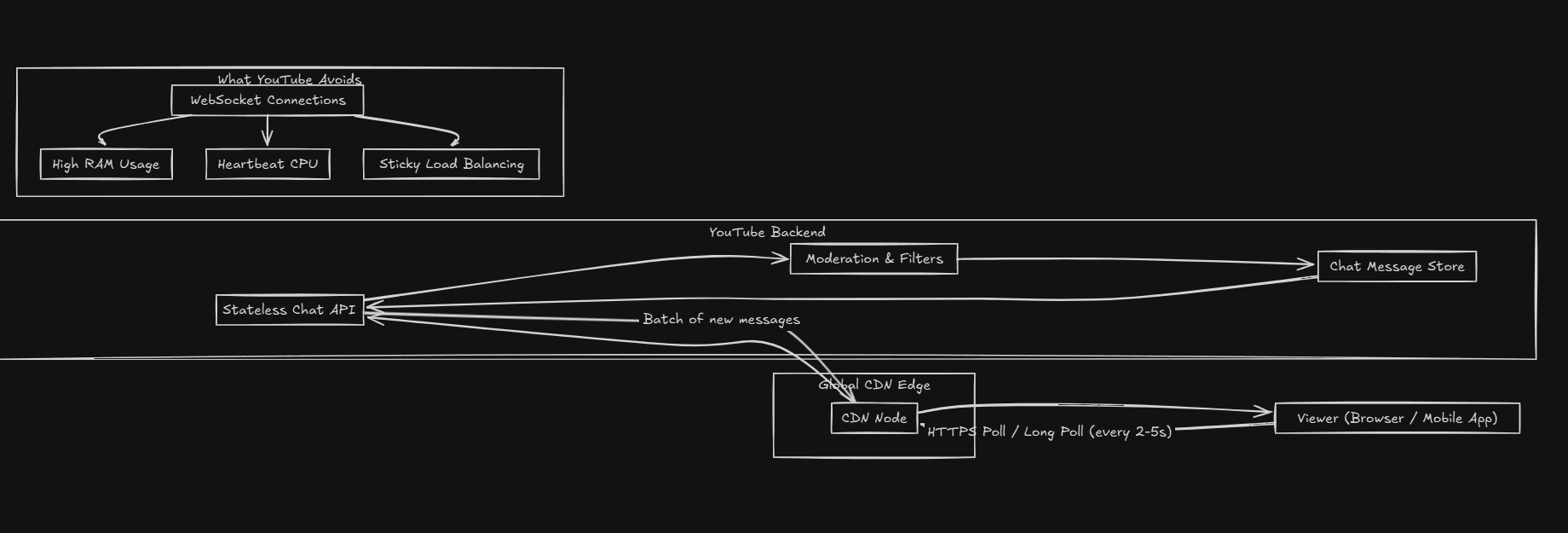

Why WebSockets Do Not Scale Well for YouTube

At YouTube's scale, WebSockets present fundamental challenges that make them impractical.

1. Persistent Connections Are Expensive

Each WebSocket connection consumes:

- Kernel memory
- File descriptors
- Buffers
- Heartbeat CPU cycles
- Load balancer state

Even with aggressive optimization, a single connection can cost tens of kilobytes of RAM. Now multiply that by 5–10 million concurrent viewers. This alone would require hundreds of gigabytes of RAM just to keep sockets open, before a single message is sent.

2. WebSockets Break CDN Economics

YouTube's biggest advantage is its global CDN. CDNs are designed for:

- Stateless HTTP
- Request/response flows
- Massive fan-out

WebSockets:

- Bypass caching
- Require connection affinity
- Reduce edge efficiency

At YouTube scale, anything that bypasses the CDN is financially dangerous.

3. Load Balancing Becomes Hard

With HTTP:

- Any request can hit any server
- Failures are cheap
- Retries are trivial

With WebSockets:

- Connections are sticky
- A server failure drops every connection that server was holding, and a wider outage can drop millions of users at once
- Recovery requires reconnection storms

Operationally, this is a nightmare at global scale.

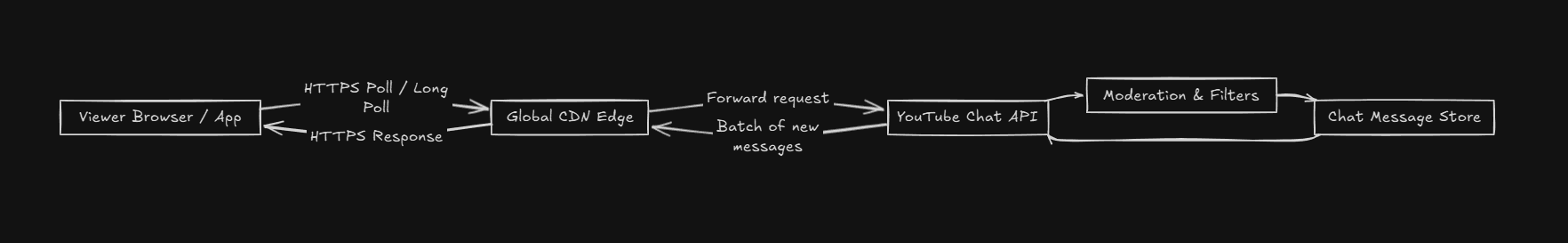

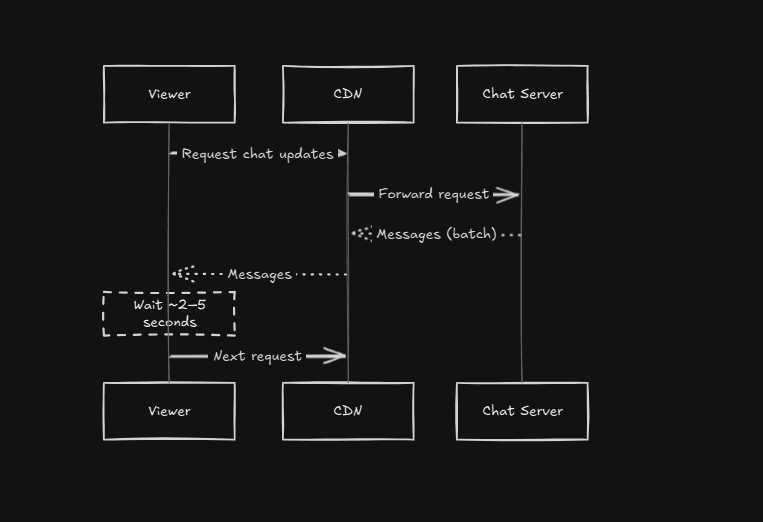

What YouTube Uses Instead

YouTube Live Chat relies on:

- HTTPS requests
- Batching of messages
- Adaptive polling intervals

Typical flow:

1. Client requests chat messages
2. Server holds request briefly
3. Responds with all new messages
4. Client immediately sends the next request

This request, hold, respond loop is usually called long polling. It is also loosely described as HTTP streaming or pull-based real-time.
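The typical flow above can be sketched in a few lines of Python. This is a minimal in-memory illustration, not YouTube's implementation: `ChatRoom`, `run_client`, `next_poll_delay`, the cursor scheme, and every timing value are assumptions made up for the example. The server side holds a request until a message arrives or a short timeout passes, then returns everything the client has not yet seen as one batch.

```python
import threading


class ChatRoom:
    """In-memory stand-in for a chat backend (hypothetical, for illustration)."""

    def __init__(self):
        self._messages = []                 # append-only message log
        self._cond = threading.Condition()

    def post(self, text):
        with self._cond:
            self._messages.append(text)
            self._cond.notify_all()         # wake any held poll requests

    def poll(self, cursor, hold_seconds=1.0):
        """Return (batch, next_cursor), holding briefly if nothing is new."""
        with self._cond:
            # Step 2: hold until a message arrives or the hold time expires.
            self._cond.wait_for(
                lambda: len(self._messages) > cursor, timeout=hold_seconds
            )
            # Step 3: respond with every message the client has not seen.
            batch = self._messages[cursor:]
            return batch, cursor + len(batch)


def run_client(room, rounds, hold_seconds=0.2):
    """Steps 1 and 4: request, then request again from the new cursor."""
    cursor, seen = 0, []
    for _ in range(rounds):
        batch, cursor = room.poll(cursor, hold_seconds)
        seen.extend(batch)
    return seen


def next_poll_delay(current_delay, batch_size, min_delay=0.5, max_delay=5.0):
    """Adaptive interval (assumed policy): poll sooner when busy, back off when quiet."""
    if batch_size > 0:
        return max(min_delay, current_delay / 2)
    return min(max_delay, current_delay * 2)


room = ChatRoom()
room.post("hello")
room.post("first!")
# A message that arrives while a later request is being held.
threading.Timer(0.05, room.post, args=("late message",)).start()
received = run_client(room, rounds=3)
print(received)
```

The client never holds a long-lived socket: each round is an ordinary request that any server could answer, and the cursor is the only state it carries. The `next_poll_delay` helper shows one possible way to adapt the interval for clients that wait between requests instead of re-requesting immediately.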

Why This Works

Chat messages:

- Are text
- Are tolerant of 1–2 seconds latency
- Are consumed by humans, not machines

YouTube optimizes for perceived real-time, not theoretical zero latency.

Cost Comparison (Back-of-the-Envelope)

Let's compare the infrastructure costs of both approaches.

WebSockets Scenario

Assumptions:

- 5M concurrent users
- ~75 KB RAM per connection (conservative)

5,000,000 × 75 KB ≈ 375 GB RAM

Now add:

- Redundancy
- Multi-region replication
- Peak events

This easily turns into thousands of servers.
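The arithmetic can be checked with a short Python sketch. The 5M users and 75 KB per connection come from the assumptions stated here; the `redundancy` and `peak_factor` multipliers are hypothetical placeholders, included only to show how quickly the baseline grows once headroom is added.

```python
def socket_memory_gb(concurrent_users, kb_per_connection):
    """RAM needed just to hold open connections, in decimal gigabytes."""
    total_kb = concurrent_users * kb_per_connection
    return total_kb / 1_000_000  # 1 GB = 1,000,000 KB in decimal units


baseline_gb = socket_memory_gb(5_000_000, 75)
upper_gb = socket_memory_gb(10_000_000, 75)

# Hypothetical multipliers, not figures from any real deployment.
redundancy = 2      # assumed: every connection has a standby replica
peak_factor = 1.5   # assumed: headroom for peak events
provisioned_gb = baseline_gb * redundancy * peak_factor

print(baseline_gb)     # 375.0
print(upper_gb)        # 750.0
print(provisioned_gb)  # 1125.0
```

The numbers are rough by design. The point is that the cost is paid per open connection, whether or not any chat message is ever sent on it.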

HTTP Polling Scenario

With HTTP polling:

- Connections are short-lived
- Handled mostly by CDN edges
- Origin servers see aggregated traffic

Result:

- Minimal per-user memory
- Linear cost scaling
- Excellent cache locality
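To make "origin servers see aggregated traffic" concrete, here is a toy Python model of an edge cache. It is a sketch under assumptions: `EdgeCache`, `origin_fetch`, the `(room, cursor)` cache key, and the one-second TTL are all invented for illustration and do not describe how YouTube's CDN is configured. The idea is that when many viewers ask for the same slice of chat within a short window, the edge answers most of them from cache.

```python
import time


class EdgeCache:
    """Toy CDN edge: caches poll responses per key for a short TTL (hypothetical)."""

    def __init__(self, origin_fetch, ttl_seconds=1.0, clock=time.monotonic):
        self._origin_fetch = origin_fetch
        self._ttl = ttl_seconds
        self._clock = clock
        self._entries = {}      # key -> (expires_at, response)
        self.origin_hits = 0    # how many requests reached the origin

    def get(self, key):
        now = self._clock()
        entry = self._entries.get(key)
        if entry is not None and entry[0] > now:
            return entry[1]     # served from the edge, origin untouched
        self.origin_hits += 1
        response = self._origin_fetch(key)
        self._entries[key] = (now + self._ttl, response)
        return response


def origin_fetch(key):
    """Stand-in for the chat origin; returns a fixed batch for the example."""
    room, cursor = key
    return {"room": room, "cursor": cursor, "messages": ["hi", "gg"]}


fake_now = [0.0]  # controllable clock so the example is deterministic
edge = EdgeCache(origin_fetch, ttl_seconds=1.0, clock=lambda: fake_now[0])

# 10,000 viewers poll the same room at the same cursor within the TTL.
for _ in range(10_000):
    edge.get(("live-123", 42))
hits_within_ttl = edge.origin_hits
print(hits_within_ttl)   # 1

# After the TTL expires, the next poll goes back to the origin once.
fake_now[0] = 1.5
edge.get(("live-123", 42))
print(edge.origin_hits)  # 2
```

In this model the origin load depends on the number of distinct keys per TTL window rather than on the number of viewers, which is the property a persistent per-user socket cannot offer.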

Estimated Savings

Industry estimates suggest that avoiding persistent sockets at YouTube scale saves:

- Tens of millions of dollars per year in infrastructure
- Massive engineering complexity
- Significant failure risk

The real savings are not just compute; they are operational simplicity.

Why Twitch Made a Different Choice

Twitch uses:

- WebSockets
- IRC-style protocol

Because:

- Chat is the product
- Viewer counts are lower per channel
- Sub-second latency matters more

Different product → different architecture.

A Broader Industry Pattern

Many large platforms follow YouTube's model:

- Twitter timelines
- Instagram comments
- Reddit live threads

They all prioritize:

- HTTP
- Statelessness
- CDN leverage

WebSockets are used sparingly, where truly necessary.

Lessons for System Designers

The choice between WebSockets and HTTP polling isn't about technology—it's about trade-offs. Understanding your scale, latency requirements, and cost constraints will guide you to the right architecture.

- Use WebSockets only if: Real-time is mission critical, you control concurrency, and you can afford stateful infrastructure

- Prefer HTTP/SSE if: Scale is massive, cost matters, and human latency tolerance exists

Conclusion

YouTube Live Chat is a classic example of engineering restraint. Instead of chasing theoretical real-time perfection, YouTube optimized for:

- Scale
- Cost
- Reliability
- Operational sanity

By choosing HTTP over WebSockets, YouTube built a system that looks real-time, feels real-time, and saves millions of dollars every year, all while serving the largest audience on the internet. Sometimes the best architecture is not the newest one, but the one that scales.

*This article is based on public system design discussions, CDN architecture principles, and large-scale distributed systems practices rather than private or proprietary disclosures.*